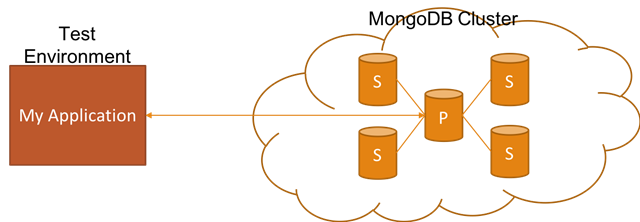

Being able to easily set up realistic non-production (e.g. dev, test, QA) environments is critical for shortening the feedback loop. In this blog post, I want to talk about how you can achieve this if your application relies on a MongoDB replica set, by showing you how to set one up with Docker for non-production environments.

Docker (5)

Token-based authentication is a fairly common way of authenticating a user for an HTTP application. However, handling this in a load-balanced environment has always required extra care. In this post, I will show you how this is handled in ASP.NET Core by demonstrating it with HAProxy and Redis, with the help of Docker.

I am going to be at a few conferences in the upcoming weeks and I would like to share them here with you. If you are going to be around for any of the events below, let's meet and say hi to each other :)

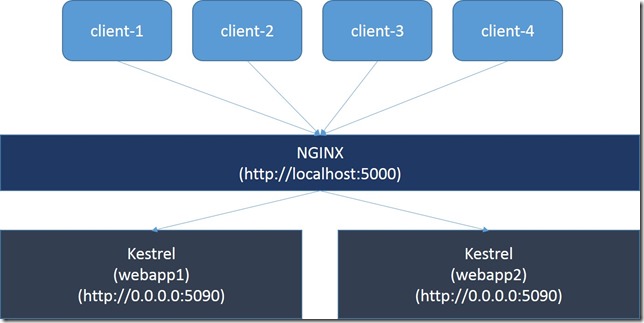

In this post, I want to show you what it looks like to expose ASP.NET 5 through NGINX, provide a simple load-balancing mechanism running locally, and orchestrate all of this through Docker Compose.

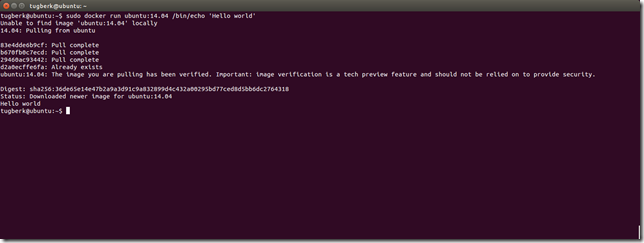

I have also been looking into Docker for a while now. In this post, I plan to cover what made me love Docker and where it shines for me.