Last week, I moved all my personal compute and storage from Azure to AWS and started managing it through Terraform. While doing so, I discovered that you can get SSL for your web application at no additional charge when using an AWS Application Load Balancer. Setting it up required stitching a few pieces together, and I wanted to share how I configured it through Terraform.


Token-based authentication is a fairly common way of authenticating users of an HTTP application. However, handling it in a load-balanced environment has always required extra care. In this post, I will show you how this is handled in ASP.NET Core by demonstrating it with HAProxy and Redis, with the help of Docker.

I want to share a few thoughts I have been keeping to myself on showing progress for long-running asynchronous operations in a system where individual events can be sent while those operations are still ongoing.

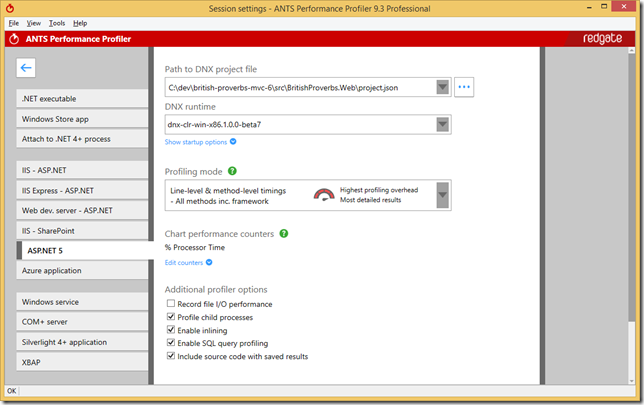

ANTS Performance Profiler from Redgate supports ASP.NET 5 applications running on DNX, allowing you to profile them and spot performance problems in a really easy and unobtrusive way. In this blog post, I will show you how it can help, using a sample application.

This is a brain-dump blog post, which I don't usually write, but I needed to get this off my chest. Restaurants and software applications share some common characteristics in how they need to work, and this post highlights some of them.

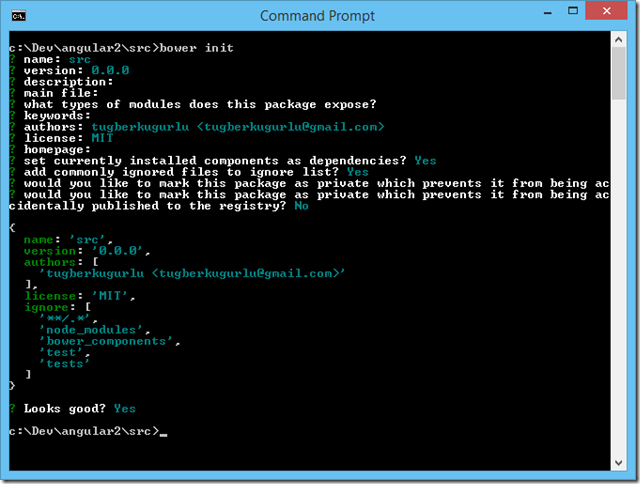

In this post, I'll walk you through how you can set up your environment from scratch to get going with ASP.NET vNext.

Yesterday, I was looking for a really quick test space on my machine to play with AngularJS, and I found http-server: a simple, zero-configuration command-line HTTP server.

Are you downloading large files with HttpClient and seeing it consume lots of memory? This post is probably for you. Let's see how to efficiently stream large HTTP responses with HttpClient.
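The core idea behind streaming a large response is to consume the body in fixed-size chunks rather than buffering all of it in memory first. As a language-neutral illustration (not the HttpClient code from the post), here is a minimal Python sketch using the standard library's `urllib.request`; the helper names `copy_in_chunks` and `download_to_file` and the 64 KiB chunk size are hypothetical choices.

```python
import urllib.request
from typing import BinaryIO

# Hypothetical chunk size: at most this many bytes are held per read.
CHUNK_SIZE = 64 * 1024


def copy_in_chunks(src: BinaryIO, dst: BinaryIO, chunk_size: int = CHUNK_SIZE) -> int:
    """Copy src to dst one chunk at a time and return the total bytes copied."""
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:  # an empty bytes object signals end of stream
            break
        dst.write(chunk)
        total += len(chunk)
    return total


def download_to_file(url: str, dest_path: str) -> int:
    """Stream an HTTP response body straight to disk (hypothetical helper)."""
    # urlopen returns a file-like response object; each read() pulls the next
    # part of the body instead of loading the whole payload into memory.
    with urllib.request.urlopen(url) as response, open(dest_path, "wb") as out:
        return copy_in_chunks(response, out)
```

The standard library's `shutil.copyfileobj(fsrc, fdst, length)` performs the same chunked copy; the loop is spelled out here only to make the constant-memory idea visible.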

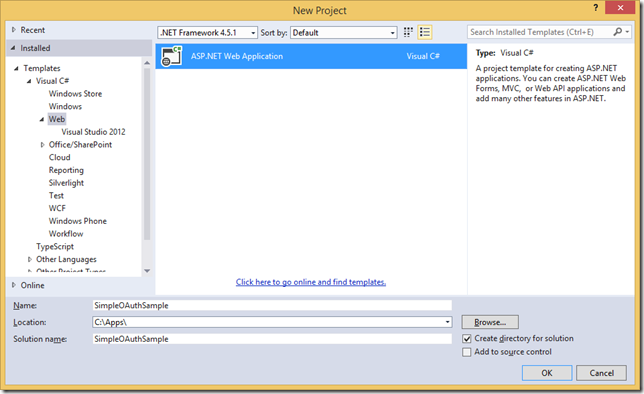

In my previous post, I emphasized a few important facts from my journey of building an OAuth authorization server. As the saying goes: "Talk is cheap. Show me the code." That is exactly what I'm trying to do in this blog post. This post is also the first one in the "Simple OAuth Server" series.

Securing our HTTP API endpoints is one of the biggest challenges we face when writing so-called modern applications, and this is where OAuth 2.0 comes in. In this post, I will highlight the things I have found vital over the last couple of months while working on an OAuth 2.0 server implementation in the .NET Framework.