The Task class has a static method called FromResult, which returns an already completed Task object (in the RanToCompletion status). I have seen a few developers awaiting a Task.FromResult call, which clearly indicates a misunderstanding. I'm hoping to clear the air a bit with this post.

async (11)

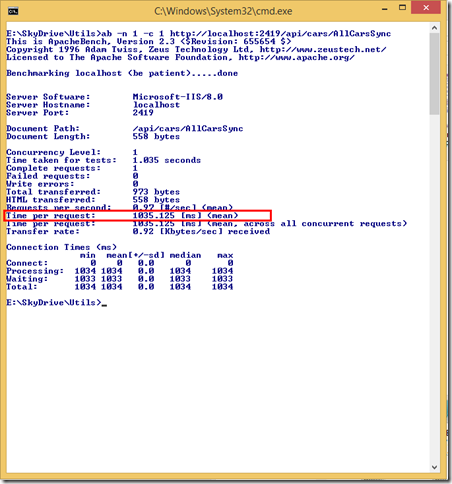

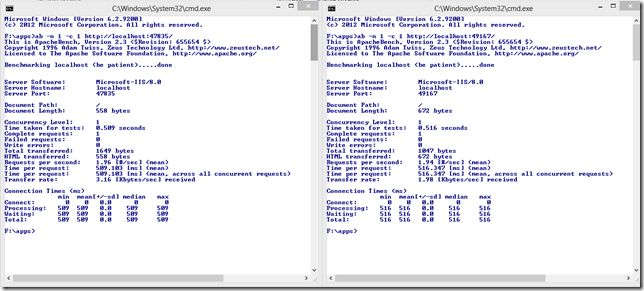

When we have multiple uncorrelated I/O operations to kick off, there are quite a few ways to fire them off, and which one you choose makes a great deal of difference in a .NET server-side application. In this post, we will see how to handle the different approaches in ASP.NET Web API.

This weekend, I spoke at an event in Izmir: DEU Bilgisayar Topluluğu Izmir 2. Teknoloji Zirvesi. Slides and source code for my sessions are available.

I have listed some resources on asynchronous programming for .NET server applications with C#, including blog posts, presentations and podcasts.

Why am I not using NancyFx instead of ASP.NET MVC / Web API? Because NancyFx is missing one vital part: asynchrony!

A couple of hours ago, @DamianEdwards announced that the ASP.NET SignalR Alpha 1.0.0 release is now publicly available! Even better, SignalR has just shipped with the ASP.NET Fall 2012 Update!

Writing asynchronous .NET client libraries for your HTTP API using the asynchronous language features (aka async/await), and a deadlock issue you might face along the way.

How to deal with asynchrony most efficiently inside ASP.NET Web API HTTP message handlers, using either the TaskHelpers NuGet package or the C# 5.0 asynchronous language features.

Let's see how we can end up with a deadlock using the C# 5.0 asynchronous language features (AKA async/await) in our ASP.NET applications and how to prevent these kinds of scenarios.

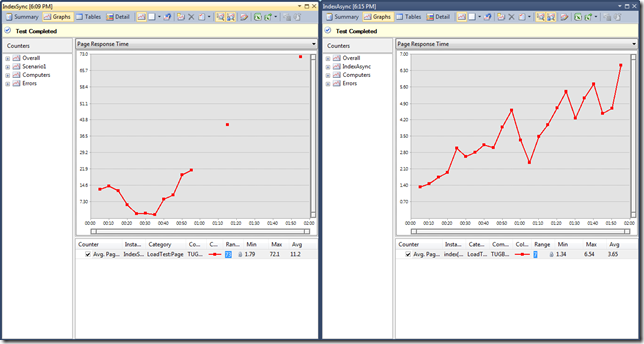

Asynchronous Database Calls With Task-based Asynchronous Programming Model (TAP) in ASP.NET MVC 4 and its performance impacts.

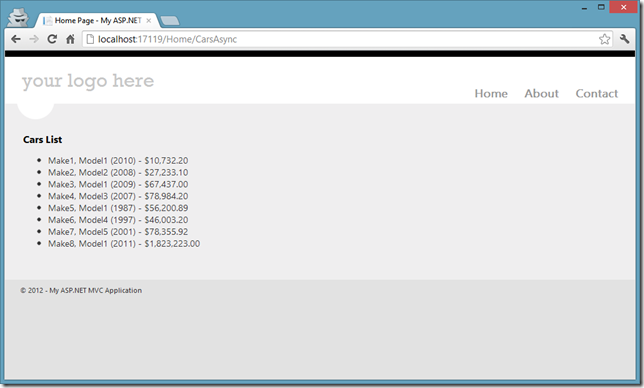

I'm trying to show you what the new C# 5.0 can bring us in terms of asynchronous programming with the await keyword, especially in ASP.NET MVC 4 web applications.