Token-based authentication is a fairly common way of authenticating a user for an HTTP application. However, handling this in a load-balanced environment has always required extra care. In this post, I will show you how this is handled in ASP.NET Core by demonstrating it with HAProxy and Redis with the help of Docker.

ASP.Net (101)

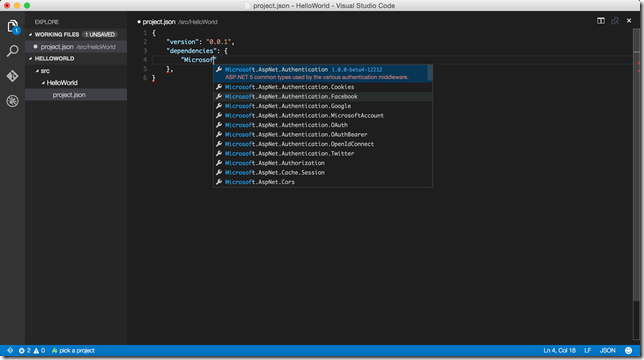

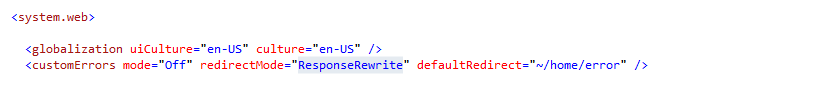

.NET Core Runtime RC2 was released a few days ago along with .NET Core SDK Preview 1. ASP.NET Core RC2 was released at the same time. While I am migrating my projects to RC2, I wanted to write about how I am getting each stage done. In this post, I will show you the tooling aspect of the changes.

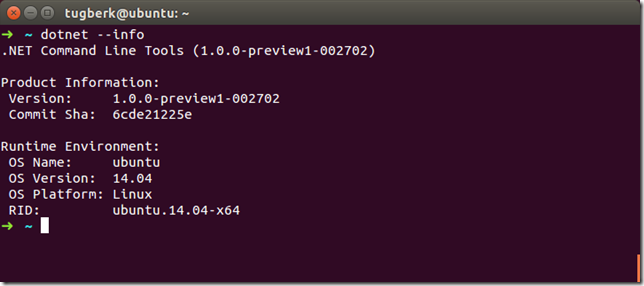

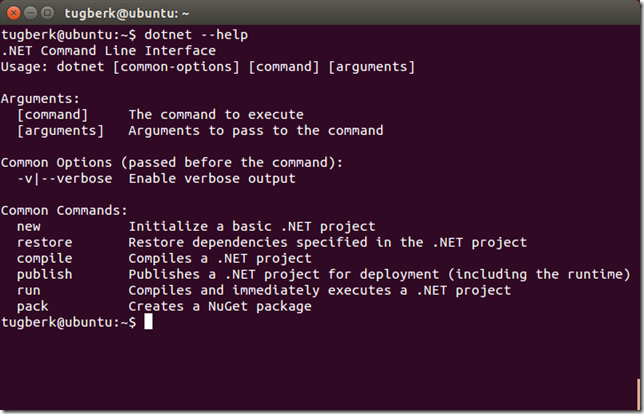

The dotnet CLI tool can be used for building .NET Core apps and libraries throughout your development flow (compiling, NuGet package management, running, testing, etc.) on various operating systems. Today, I will be looking at this tool on Linux, specifically its native compilation feature.

I was at Umbraco UK Festival 2015 in London a few weeks ago to give a talk on Profiling .NET Server Applications and the session is now available to watch.

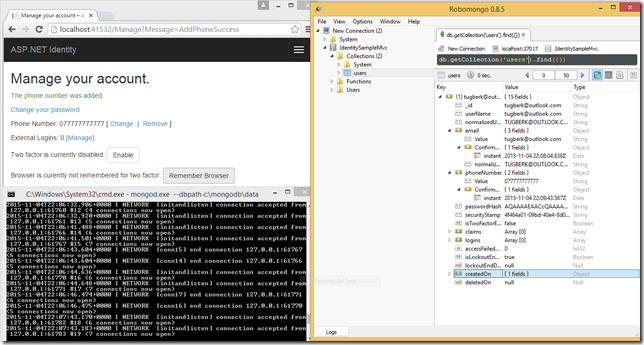

ASP.NET Identity will have a new version with ASP.NET 5, which is going to be version 3.0.0, and I gave it a shot by implementing a MongoDB data store for ASP.NET Identity.

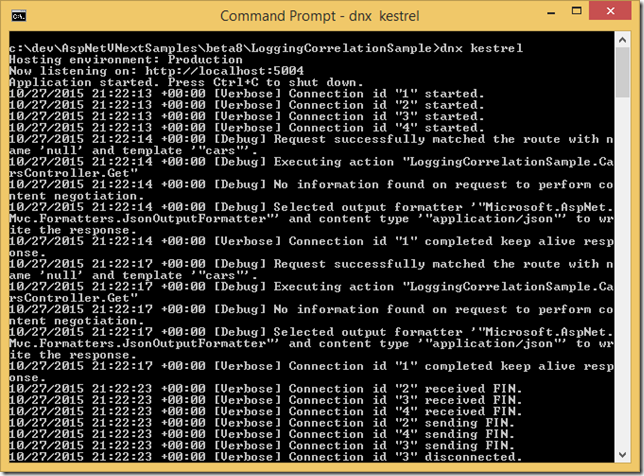

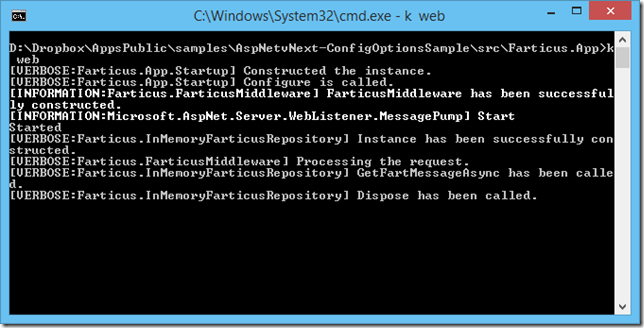

ASP.NET 5 is full of big new features and enhancements but, besides these, I am most impressed by its little, tiny features. One of them is log correlation, which is provided out of the box. Let me quickly show you what it is in this post.

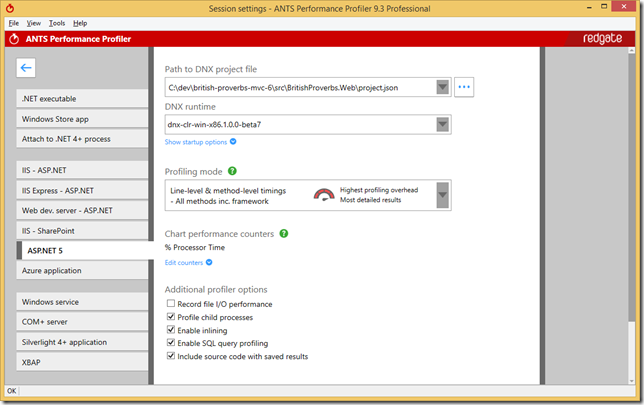

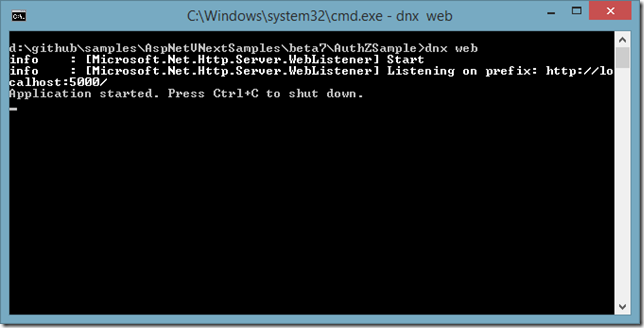

ANTS Performance Profiler from Redgate supports ASP.NET 5 applications running on DNX and it allows you to profile your ASP.NET 5 applications to spot performance problems in a really easy and unobtrusive way. In this blog post, I will show you how it can help you with a sample.

Wondering why IHostingEnvironment.IsDevelopment returns false even when you are on your development machine? I did indeed wonder and here is why :)

Web European Conference 2015 will happen in Milan on the 26th of September and I will be talking about ASP.NET 5 there!

Last Friday, I was at Progressive .NET Tutorials 2015 in London and I gave two talks on ASP.NET 5. Here are the video recordings and slides of both talks!

I have a few speaking activities lined up in upcoming weeks on ASP.NET 5 and DLM and I thought it would be good to share these with you all :)

Today is one of those awesome days if you build stuff on the .NET platform. They announced a bunch of stuff during the Build 2015 keynote and one of them is Visual Studio Code, a free and stripped-down version of Visual Studio which works on Mac OS X, Linux and Windows. Let me give you my highlights in this short blog post :)

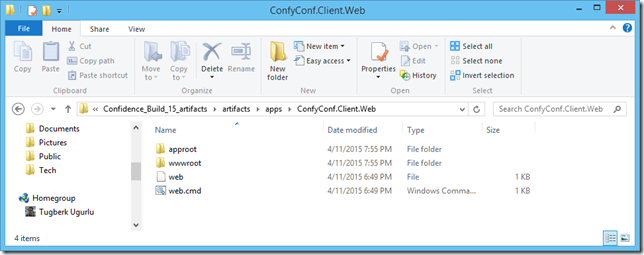

An ASP.NET 5 application has a totally different directory structure when you publish it, and it wasn't clear to me how Azure Web Apps is actually able to host an ASP.NET 5 application. If you are confused about this as well, the answer is here.

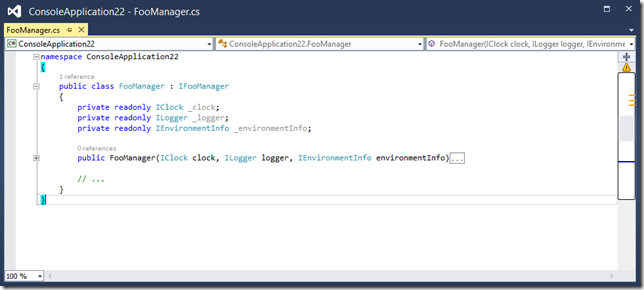

Reasons why I prefer dependency injection over static accessors.

From the very first day of ASP.NET vNext, the per-request dependencies feature has been a first-class citizen inside the pipeline. In this post, I'd like to show you how you can use this feature inside your middleware.

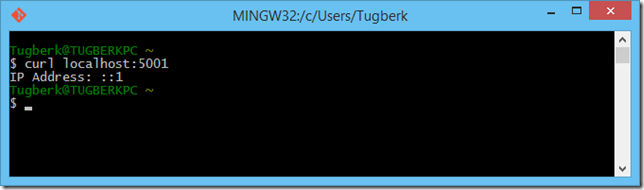

I was wondering about how to get the client’s IP address inside an ASP.NET vNext web application. It’s a little trickier than it should be but I finally figured it out :)

I was at the Microsoft Turkey office yesterday and I gave a presentation on ASP.NET Web API and SignalR as part of this year’s MSP Kickoff. This post covers where you can find the presentation slides, the recording and several relevant links.

Wanna see ASP.NET vNext and Gulp working together? You are at the right place :) Let's have a look at gulp-aspnet-k, a little plugin that I have created for ASP.NET vNext gulp integration.

A few days ago, I started a new blog post series about ASP.NET vNext. Today, I would like to talk about something which is MVC specific and takes one of our pains away: view components :)

Visual Studio CTP 3 was launched a while back and I was expecting to have trouble working with ASP.NET vNext beta builds. I was partially right. I wasn’t able to run the web application from Visual Studio. However, it’s still possible to debug the application and I have a workaround for you :)

As of today, I am starting a new blog post series about ASP.NET vNext. To kick things off, I would like to lay out the resources about ASP.NET vNext here, which is probably going to be an ultimate guide to ASP.NET vNext.

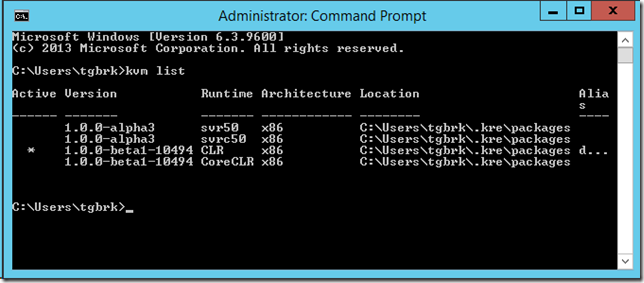

In this post, I'll walk you through how you can set up your environment from scratch to get going with ASP.NET vNext.

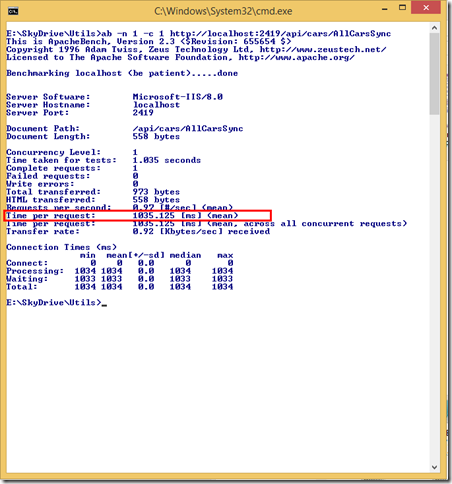

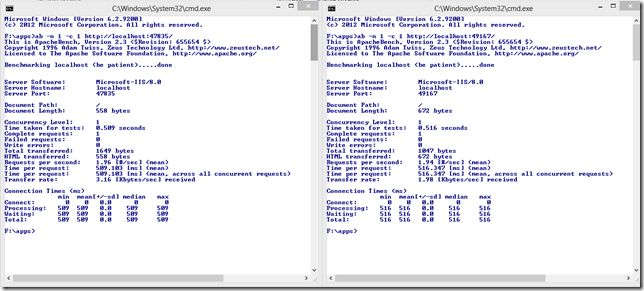

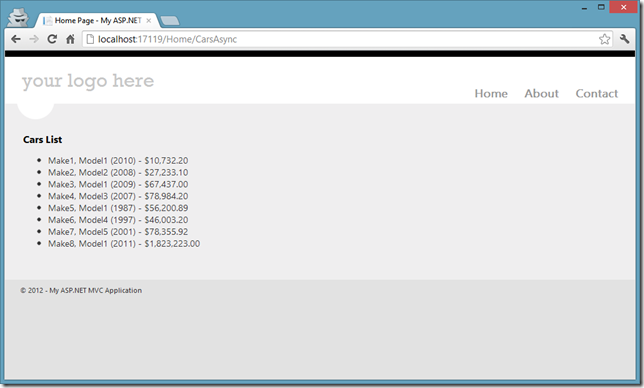

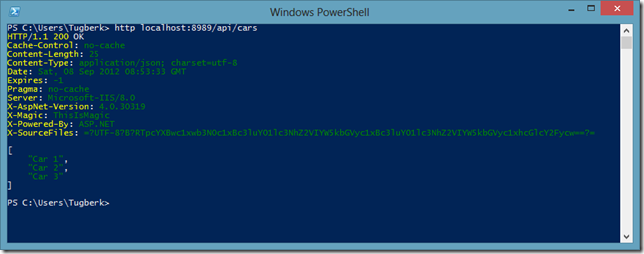

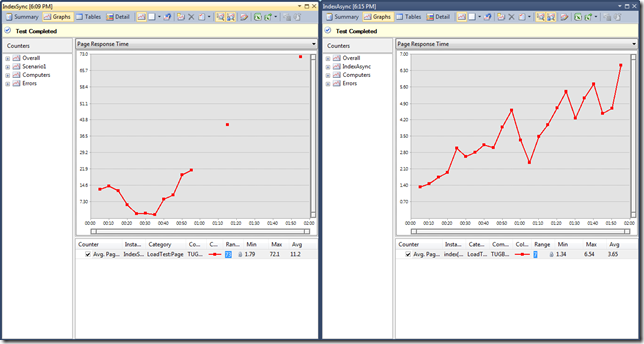

When we have multiple uncorrelated I/O operations that need to be kicked off, we have quite a few ways to fire them off, and the way you choose makes a great deal of difference in a .NET server-side application. In this post, we will see how we can handle the different approaches in ASP.NET Web API.

A while back, the ASP.NET team introduced ASP.NET Identity, a membership system for ASP.NET applications. Today, I'm introducing its RavenDB implementation: AspNet.Identity.RavenDB.

Today, I am very proud to say that the Pro ASP.NET Web API book has shipped and is available on Amazon in paperback :)

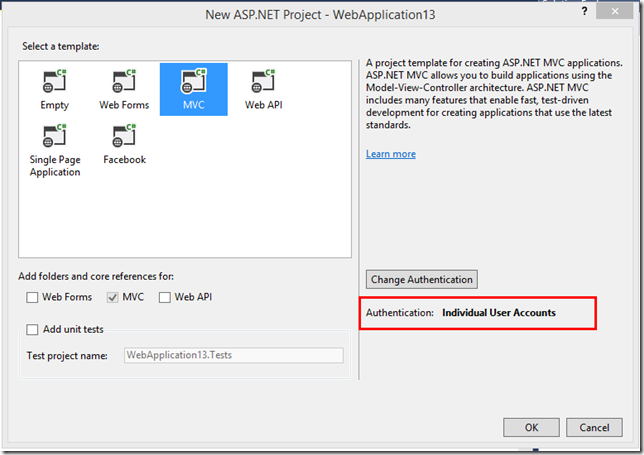

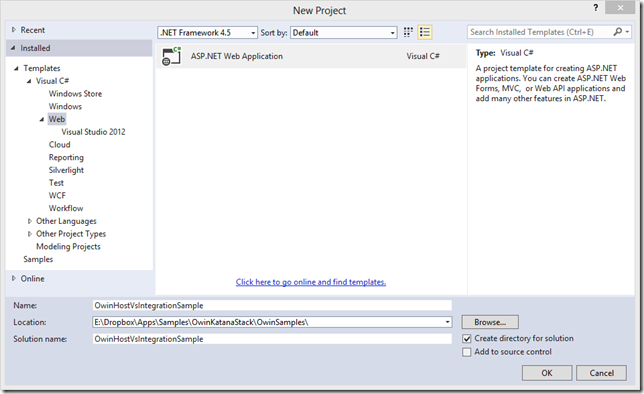

With Visual Studio 2013 RC, we are introduced to a new extensibility point: External Host. This gives us the F5 experience with OwinHost.exe on VS 2013 and this post walks you through this feature.

I was at Microsoft's Turkey headquarters giving talks on the Microsoft Web Stack for Microsoft Summer School, and here are the presentation samples and links for the .NET Web Stack.

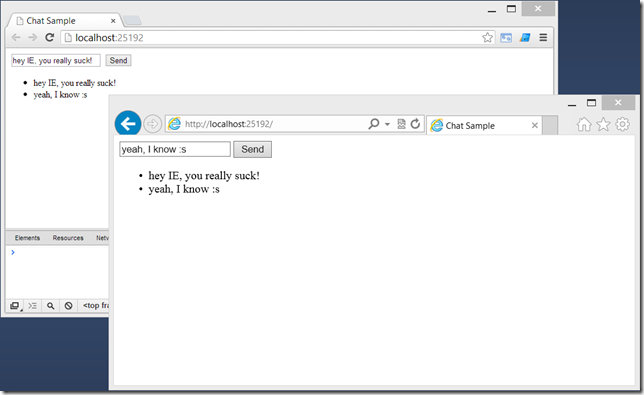

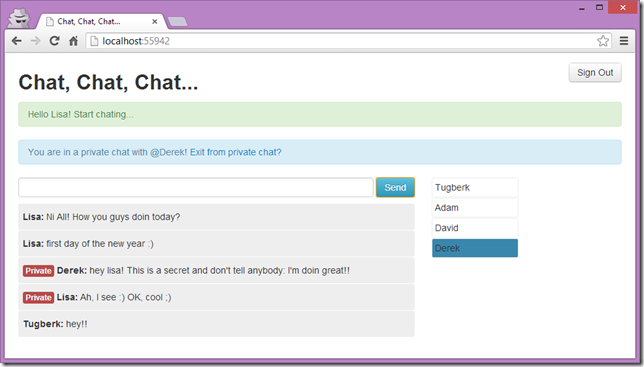

Learn how easy it is to scale out SignalR with a Redis backplane and simulate a local web farm scenario with IIS Express.

A few days ago, I presented on a webcast about ASP.NET SignalR and real-time web application scenarios in Turkish and its recording is now available.

Web Camp Istanbul was held at Microsoft Istanbul office yesterday and here are the links, source code and slides from my talks

One leg of Microsoft Web Camps spring 2013 tour will be held in Microsoft Istanbul office on the 6th of April, 2013.

I was at MSFT Istanbul office yesterday to give a presentation on Microsoft Web Stack for MSPs. Slides and samples are now available online.

If you enabled tracing on your ASP.NET Web API application, you may see a dispose issue for IDependencyScope. Here is why and how you can work around it.

This weekend, I participated in an event as a speaker in Izmir, DEU Bilgisayar Topluluğu Izmir 2. Teknoloji Zirvesi. Slides and source code for my sessions are available.

I listed some resources on asynchronous programming for .NET server applications with C# which consist of blog posts, presentations and podcasts.

One of the common questions about SignalR is how to broadcast a message to specific users, and mapping the SignalR connections to your real application users is the key component for this.

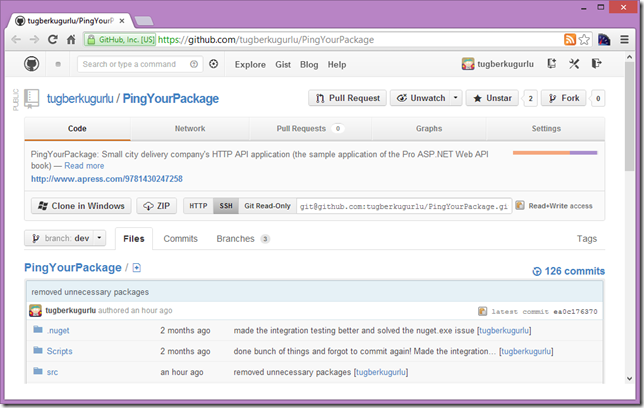

We wanted to give you an early glimpse of the Pro ASP.NET Web API's sample application (PingYourPackage), and its source code is now up on GitHub.

Why am I not using NancyFx instead of ASP.NET MVC / Web API? Because of a very important and vital missing part with NancyFx: asynchrony!

SignalR - Real-time Web Applications webcast recording (in Turkish) is available. The video is available on NedirTV and Vimeo.

Advanced ASP.NET Web API Webcast Offline Recording (In Turkish) is Available

The slides and the full source code of the Advanced ASP.NET Web API webcast (Turkish) are available.

A couple of hours ago, @DamianEdwards announced that the ASP.NET SignalR Alpha 1.0.0 release is now publicly available! Even better! SignalR has just shipped with the ASP.NET Fall 2012 Update!

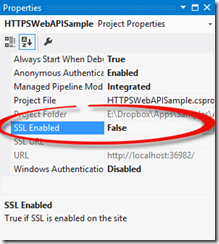

We will see how to smoothly work with IIS Express Self-signed Certificate, ASP.NET Web API and HttpClient by placing the self-signed certificate in the Trusted Root CA store.

One of the common misconceptions about ASP.NET Web API is that it is built on top of ASP.NET MVC. Today, I am going to break it!

Two days ago, I blogged about the availability of the Pro ASP.NET Web API book through the Apress Alpha Program. Today, the Pro ASP.NET Web API book is available on Amazon for pre-order.

I am proud to announce that the Pro ASP.NET Web API book is available through Apress Alpha Program and you can get access to the early bits and completed chapters now.

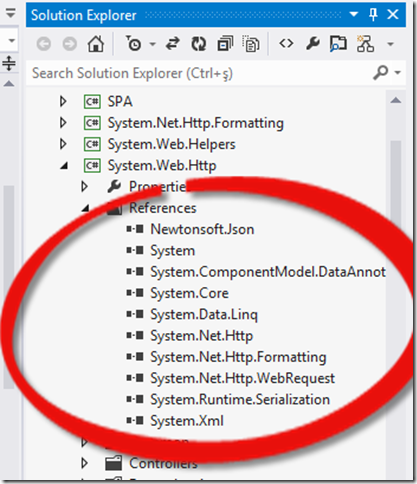

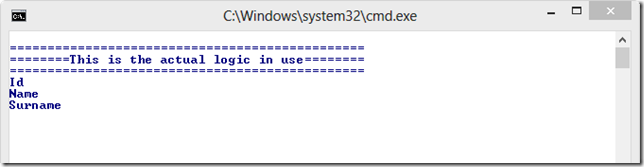

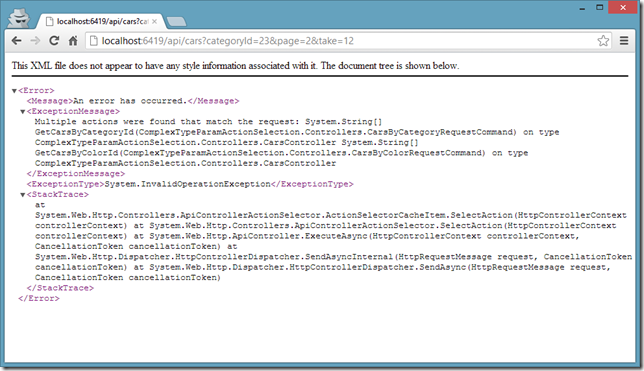

In this post, we will see how ComplexTypeAwareActionSelector behaves under the covers to involve complex type action parameters during the action selection process.

We will see how to make complex type action parameters play nice with controller action selection in ASP.NET Web API by using ComplexTypeAwareActionSelector from WebAPIDoodle NuGet package.

Yesterday, I received an awesome e-mail telling me that I have been given the Microsoft MVP award on ASP.NET/IIS for 2012.

How to use complex type action parameters in ASP.NET Web API and involve them inside the controller action selection logic

Writing asynchronous .NET client libraries for your HTTP API with asynchronous language features (aka async/await), and a deadlock issue you might face.

How to handle ModelState Validation errors in ASP.NET Web API with an Action Filter and HttpError object

How to most efficiently deal with asynchrony inside ASP.NET Web API HTTP Message Handlers with TaskHelpers NuGet package or C# 5.0 asynchronous language features

Glenn Block explains why we need ASP.NET Web API when we already have ASP.NET MVC and what we can achieve with ASP.NET Web API

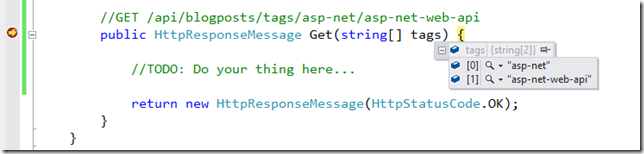

ASP.NET Web API has a concept of catch-all routes but the framework doesn't automatically bind catch-all route values to a string array. Let's customize it with a custom HttpParameterBinding.

How to dispose resources at the end of the request lifecycle in ASP.NET Web API with the RegisterForDispose extension method for the HttpRequestMessage class

In this post, we will see how to easily run content negotiation (conneg) manually in an ASP.NET Web API application.

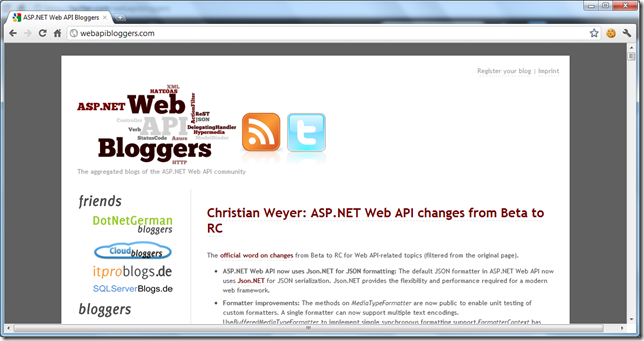

A couple of days ago, @AlexZeitler launched the WebAPIBloggers web site, which aggregates the blog posts of several bloggers who write about ASP.NET Web API.

Let's see how we can end up with a deadlock using the C# 5.0 asynchronous language features (AKA async/await) in our ASP.NET applications and how to prevent these kinds of scenarios.

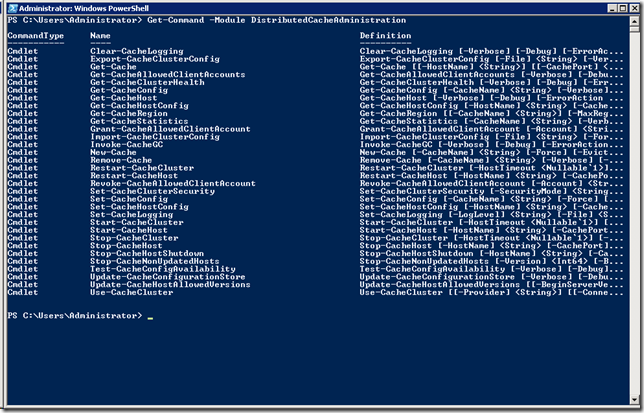

I started to use Windows Server AppFabric for its distributed caching feature and I wanted to take a note of the useful PowerShell commands to manage the service configuration and administration.

Today, I was at Computer Engineering Department of Mugla University and I gave two introduction talks on MS Web Platform and ASP.NET MVC 101.

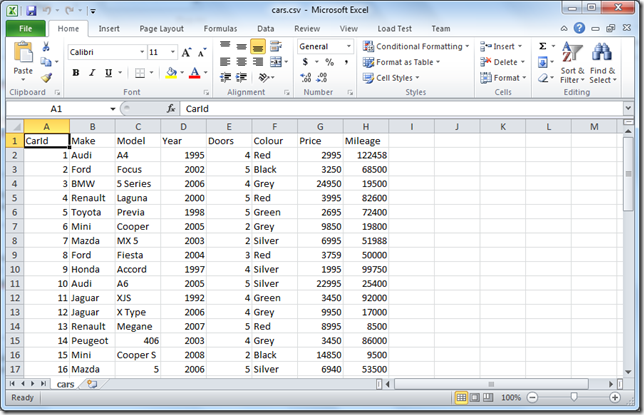

In this post, we will see how to create a custom CSVMediaTypeFormatter in ASP.NET Web API for comma-separated values (CSV) format

We will see how we can get involved in the action selection process in ASP.NET MVC with ActionNameSelectorAttribute, using a real-world use case.

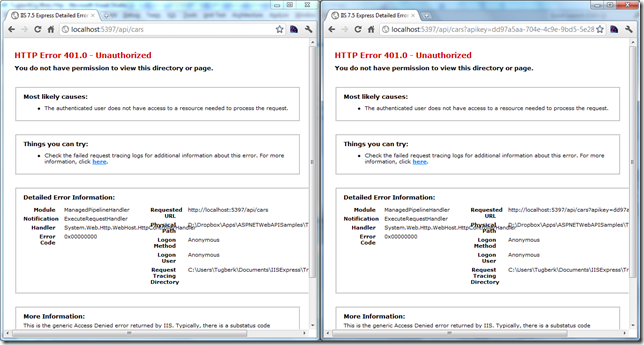

We will see how API key authorization (verification) through the query string can be implemented in ASP.NET Web API with AuthorizationFilterAttribute

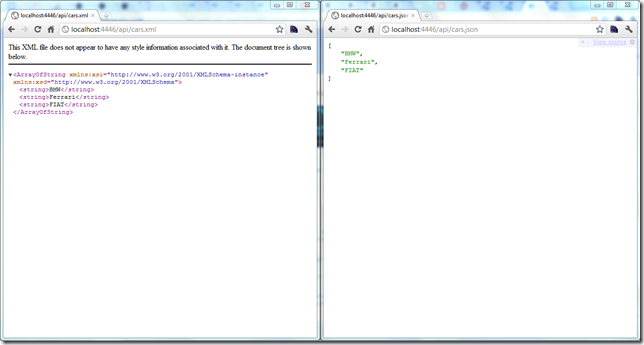

In this post, we will create RouteDataMapping. This custom MediaTypeMapping will allow us to get involved in the decision-making process about the response format according to RouteData values.

We will see how Content-Negotiation (Conneg) Algorithm works on ASP.NET Web API with MediaTypeFormatters and MediaTypeMappings

In this post, we will see how to make Autofac work with the ASP.NET Web API System.Web.Http.Services.IDependencyResolver. This is the solution to the 'controller has no parameterless public constructor' error.

I'm trying to show you what the new C# 5.0 can bring us in terms of asynchronous programming with the await keyword, especially in ASP.NET MVC 4 web applications.

I would like to point you to some resources (tutorials, videos, samples) to get started with ASP.NET Web API.

This is #2 in the series of blog posts about some core scenarios in ASP.NET MVC: A Way of Working with Html Select Element (AKA DropDownList) In ASP.NET MVC

This is #1 in the series of blog posts about some core scenarios in ASP.NET MVC: File Upload With HttpPostedFileBase Class

See how WCF Web API plays nice with ELMAH. This blog post is a quick introduction to the WCF Web API HttpErrorHandler.

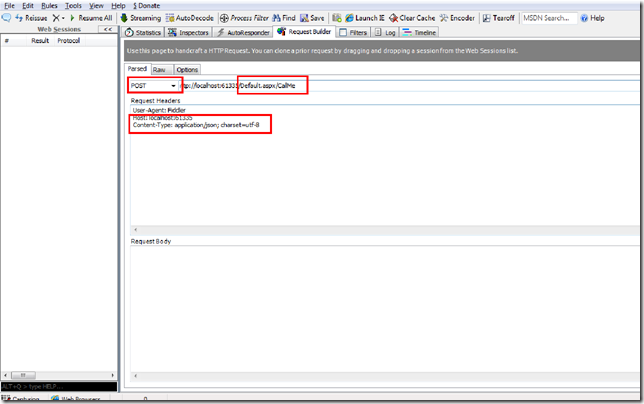

In this quick post, I will show you a way of implementing ASP.NET MVC Server Side Remote Validation just like ASP.NET MVC Remote Validation

This blog post demonstrates how to implement Donut Hole Caching in ASP.NET MVC by Using Child Actions and OutputCacheAttribute

This post shows the implementation of ASP.NET MVC Remote Validation for multiple fields with AdditionalFields property and we will validate the uniqueness of a product name under a chosen category.

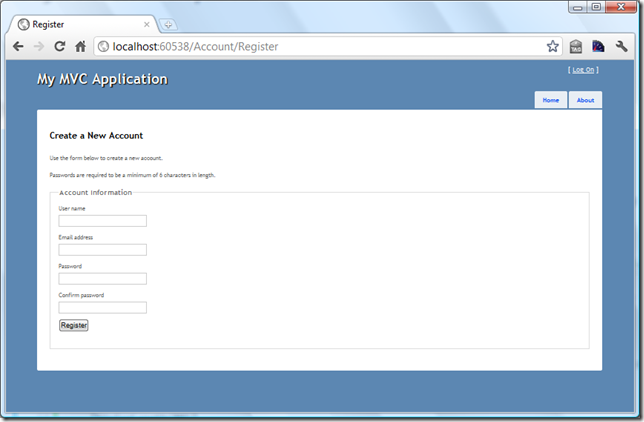

This blog post will walk you through on implementation and usage of ASP.NET MVC Remote Validation. As a sample, we will validate the availability of the username for membership registration.

In this blog post, we will see how we set up our environment for xUnit.net Unit Testing Framework. This is the first blog post of the blog post series on Unit Testing With xUnit.net for ASP.NET MVC.

We will see how to detect errors in our ASP.NET MVC views at compile time inside Visual Studio.

One of the aspects of SEO (Search Engine Optimization) is canonicalization. In this blog post, we will see how easy it is to work with the IIS Rewrite Module in order to remove the evil trailing slash from our URLs.

In this post, we'll see how easy it is to work with the jQuery AJAX API in ASP.NET MVC and how we make use of partial views to transfer a chunk of HTML from server to client with a toggle button example.

One of the best JavaScript WYSIWYG editors, TinyMCE, is now up on the NuGet live feed. How to get TinyMCE through NuGet and get it working is documented in this blog post.

In this blog post, we will see how to consume web page methods using jQuery in ASP.NET Web Forms and use ASP.NET page methods as services. You will find some cool stuff about other things as well :)

A couple of days ago, I took Exam 70-515, TS: Web Applications Development with Microsoft .NET Framework 4, and I am gonna walk you through it in this post

This awesome blog post will demonstrate how to create a complete, sub-grouped product list in a single grid. Get ready for the awesomeness...

In this blog post, we will see how to handle multiple checkboxes inside a controller in ASP.NET MVC. We will demonstrate a sample in order to delete multiple records from the database.

In this blog post, we will see how the ClientIDMode property of Web Controls makes our lives easier. Also, we will demonstrate a couple of scenarios on how it works...

Solution to an annoying error message! Are you getting the 'Could not write to output file 'c:\Windows\Microsoft.NET\Framework\....' message? You are at the right place.

In this blog post, we will see how to run an ASP.NET MVC application under IIS 6.0 and IIS 7.0 classic mode with some configurations on IIS and the Global.asax file...

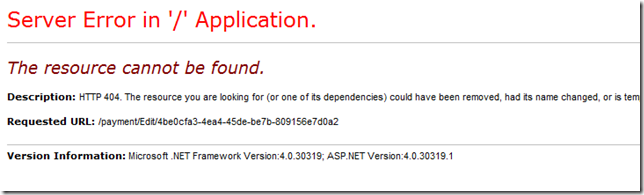

This post is a quick demonstration of how you can throw HttpException of 404 manually from a controller on ASP.NET MVC when the model you're passing is null

Microsoft MIX11 : Students & Academic Staff Discount is Available For the Conference / The chance that every geek student wants to catch!

SQL Injection and Lethal Injection... They are both dangerous and they can easily be fatal. But how? What is SQL Injection and how can it affect my project? The answers are in this blog post.

After the release of ASP.Net MVC RC 2, we are now waiting for the RTM release but some of us wanna use RC 2 already... But how to deploy it on a shared hosting account is the mind-exploding problem...

I assume that some of you folks have tried that in your ASP.Net MVC applications and tried to figure out why it doesn't work. Well, I have figured it out...

Most of the Asp.Net developers are using Membership class of Asp.Net and in this blog post we will see how to send e-mail to all of the membership users at once...

Sometimes we get sick of uploading our avatar picture to every web site we register on. This is where gravatar.com helps us.

You created a cookie in your ASP.NET forms application and now you would like to delete it. This quick article shows how to do the trick...

On the 17th of September in 2010, Microsoft released an advisory for a very serious ASP.NET vulnerability which could allow data, such as the View State, to be viewed

Country DropDownList for ASP.NET developers! Save a lot of time by copying and pasting this code to your application for a country dropdownlist. Do not create it from scratch :)

After you read this article, you will be able to use the 'switch case' statement in your C# projects. It becomes so handy with DropDownList & RadioButtonList!

This article will give you an idea of how to validate a checkbox in ASP.Net 3.5! It is so easy to implement and so handy to use!

ASP.Net Chart Control On Shared Hosting Environment (Parser Error) - Deployment of Chart Controls On Shared Hosting Environment Properly...